Locking the Vault: The Risks of Memory Data Residue

Introduction

Most of today’s applications handle sensitive data such as passwords, cryptographic keys or other confidential information. Managing these secrets is a fundamental responsibility of any application. However, what happens to them when they are no longer needed? Their disposal is a non-negotiable aspect of data security, as leftover traces can open a door for attackers. For instance, secrets lingering in memory led to CVE-2021-32942 and, more recently, to CVE-2023-32784 in KeePass.

In this post we will delve into the technical aspects of securely erasing sensitive data from memory, outlining why it’s essential and how to do it correctly. We’ll explore the often-underestimated risks associated with leaving remnants of such information in volatile memory and provide practical methods to ensure they are eradicated completely.

Background on Memory Handling

Before delving into the intricacies of securely erasing sensitive data from memory, it’s important to grasp the fundamentals of how data is stored during a program’s execution.

Programs primarily rely on RAM (Random Access Memory) to store data. It’s essential to understand that everything loaded into memory is only accessible during the execution of the program. Once the program terminates, the data stored in memory is released but not cleared. RAM retains the data it holds until that portion of memory is overwritten or until the computer’s power is cycled. This means that data, including sensitive information, can potentially linger in RAM even after a program has terminated.

In computing, memory is divided into several segments, some of which are used to store volatile data during the program’s execution:

- Stack: The stack is a region of memory where the program stores data according to the Last-In, First-Out (LIFO) principle. This means that the most recently added item is the first to be removed. In the context of program execution, the stack is responsible for managing function calls, local variables, and various control structures. When a function is called, a new stack frame is created to store information about that function’s execution, including parameters and local variables. Each function call adds a new stack frame to the top of the stack. When the function completes, its stack frame is removed from the top: the stack pointer simply moves back, and the frame’s contents are not cleared. Data in the stack is thus typically short-lived and frequently overwritten by later calls. This frequent overwriting can help mitigate some security risks, but it doesn’t entirely eliminate the need to securely erase sensitive information from the stack.

- Heap: The heap is an area used for dynamic memory allocation, where the program can request memory for objects whose size is not known until runtime. Data in the heap is more flexible but requires manual memory management to ensure proper allocation and deallocation. When memory is deallocated (freed), it is typically returned to the heap manager. This does not mean the data is cleared or overwritten: it remains in memory until that space is reused by another allocation. Sensitive data may therefore remain in memory after it has been freed.

- BSS (Block Started by Symbol): The BSS segment stores static and global variables that are uninitialized or initialized to zero. Only its length is stored in the object file. The memory itself is allocated, and zero-filled, when the program is loaded.
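The stack and heap descriptions above share one point: releasing memory updates bookkeeping, not bytes. The following toy Python model makes this concrete for the stack. It is an illustration under simplifying assumptions, not how a CPU or C runtime actually manages memory. The model uses a single downward-growing byte array, and `ToyStack` is a hypothetical class.

```python
class ToyStack:
    """Toy model of a downward-growing stack region (an illustration only)."""

    def __init__(self, size: int) -> None:
        self.memory = bytearray(size)  # backing "RAM", zero-filled at start
        self.sp = size                 # stack pointer starts at the top

    def push_frame(self, data: bytes) -> int:
        """Reserve a frame below the stack pointer and copy data into it."""
        self.sp -= len(data)
        self.memory[self.sp:self.sp + len(data)] = data
        return self.sp

    def pop_frame(self, length: int) -> None:
        """Release a frame: only the stack pointer moves, no byte is cleared."""
        self.sp += length


stack = ToyStack(64)
secret = b"mysupersecretpassword"
stack.push_frame(secret)         # a function stores a password in a local
stack.pop_frame(len(secret))     # ...and returns
print(secret in stack.memory)    # True: the frame is gone, the bytes are not

stack.push_frame(b"\x00" * 8)    # a later, smaller call reuses the top of the region
print(secret in stack.memory, b"mysupersecret" in stack.memory)  # False True
```

The second call only partially overwrites the old frame. This mirrors how a later call can destroy part of a secret while leaving the rest recoverable.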

While these principles are often consistent across various operating systems like Linux, Windows, and macOS, this post will concentrate on the Linux environment with a focus on data storage on the stack and on the heap.

Dumping memory

On Linux, there is a special directory created at /proc/<pid>/, which aggregates a wealth of information about a running process identified by the given PID (Process ID). This directory provides a valuable resource for inspecting various aspects of a running process, including:

- Command line: You can query the command line used to launch the process, offering insights into how the program was invoked.

cat /proc/275/cmdline | tr '\0' '\n'

# self is used to refer to the current process, in this case "cat"

cat /proc/self/cmdline | tr '\0' '\n'

- Environment variables: The directory also provides access to the environment variables associated with the running process, revealing environment-specific configurations and settings.

cat /proc/275/environ | tr '\0' '\n'

- Virtual memory mappings: One of the most powerful features of this directory is the ability to access the virtual memory mappings of the process. This allows you to examine the layout of memory within the process’s address space, including code, shared libraries, heap and stack segments.

cat /proc/275/maps

The last command is particularly interesting in our case, as it reveals the addresses of the memory we want to dump. For example, we can see where the stack, the heap and the BSS are located:

# .bss is somewhere in here

5601f7ddb000-5601f7ddc000 rw-p 00003000 00:90 792 /host/a.out

# heap

5601f9d03000-5601f9d24000 rw-p 00000000 00:00 0 [heap]

# stack

7ffd46dfd000-7ffd46e1e000 rw-p 00000000 00:00 0 [stack]

This can be further confirmed in gdb:

gdb -p 275

info file

# 0x00005601f7ddb010 - 0x00005601f7ddb018 is .bss

# the following command can be used to read /proc/<pid>/maps from gdb

info proc mappings

Due to ASLR (Address Space Layout Randomization), the addresses of the stack and the heap vary between program executions. ASLR is a security feature of modern operating systems that randomizes the location of these memory regions. This makes it harder for attackers to predict where specific data resides in memory.
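Because of ASLR, tools must read the maps file at run time rather than hardcode addresses. The sketch below parses one line of /proc/<pid>/maps. It assumes the usual layout: address range, permissions, offset, device, inode and an optional pathname. `parse_maps_line` and `MapEntry` are hypothetical names.

```python
import re
from typing import NamedTuple, Optional

# address range, permissions, offset, device, inode, optional pathname
MAPS_LINE = re.compile(
    r"^([0-9a-fA-F]+)-([0-9a-fA-F]+)\s+([rwxps-]{4})\s+([0-9a-fA-F]+)"
    r"\s+(\S+)\s+(\d+)\s*(.*)$"
)


class MapEntry(NamedTuple):
    start: int
    end: int
    perms: str
    offset: int
    path: str

    @property
    def size(self) -> int:
        return self.end - self.start

    @property
    def readable(self) -> bool:
        return self.perms[0] == "r"


def parse_maps_line(line: str) -> Optional[MapEntry]:
    """Parse one line of /proc/<pid>/maps; return None if it does not match."""
    m = MAPS_LINE.match(line.strip())
    if m is None:
        return None
    start, end, perms, offset, _dev, _inode, path = m.groups()
    return MapEntry(int(start, 16), int(end, 16), perms, int(offset, 16), path)


heap = parse_maps_line("5601f9d03000-5601f9d24000 rw-p 00000000 00:00 0 [heap]")
print(hex(heap.start), hex(heap.size), heap.path, heap.readable)
# 0x5601f9d03000 0x21000 [heap] True
```

Lines without a pathname, such as anonymous mappings, yield an empty `path`.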

Now that we know where our data is stored, we can read this memory through the convenient /proc/<pid>/mem file. However, accessing this file requires ptrace-level access to the target process. In practice, this usually means being the root user.

ls -la /proc/275/mem

# -rw------- 1 root root 0 Oct 8 16:41 /proc/275/mem

We needed the memory addresses because this file cannot simply be read with a program like cat. Its offsets are the process’s virtual addresses, and reading from an unmapped address, such as offset 0, fails. Instead, we have to open the file, seek to the start of a readable memory segment, and then read the data.
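The seek-then-read pattern can be tried safely on the current process, which is always allowed to read its own /proc/self/mem. The sketch is Linux-only, and `read_memory` is a hypothetical helper. It uses `os.pread`, which performs the seek and the read in one call, because the file offset is the virtual address itself.

```python
import ctypes
import os


def read_memory(pid, address: int, length: int) -> bytes:
    """Read `length` bytes at virtual `address` in the target process."""
    fd = os.open(f"/proc/{pid}/mem", os.O_RDONLY)
    try:
        # The file offset is the virtual address: pread seeks and reads at once.
        return os.pread(fd, length, address)
    finally:
        os.close(fd)


# Read back a buffer from our own address space.
buf = ctypes.create_string_buffer(b"mysupersecretpassword")
data = read_memory("self", ctypes.addressof(buf), ctypes.sizeof(buf))
print(data)  # b'mysupersecretpassword\x00'
```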

Here is a simple Python program to dump a specific section of memory to a file:

memdump.py

#! /usr/bin/env python3

import re
import sys

PID = sys.argv[1]
region = sys.argv[2]

maps_file = open(f"/proc/{PID}/maps", 'r')
mem_file = open(f"/proc/{PID}/mem", 'rb', 0)
output_file = open("dump.raw", 'wb')

for line in maps_file.readlines():  # for each mapped region
    m = re.match(r'([0-9A-Fa-f]+)-([0-9A-Fa-f]+) ([-r]).*\[([a-z]+)\]', line)
    # if this is a readable region corresponding to the one we are looking for
    if m is not None and m.group(3) == 'r' and m.group(4) == region:
        start = int(m.group(1), 16)
        end = int(m.group(2), 16)
        print(f"{hex(start)}-{hex(end)}")
        mem_file.seek(start)  # seek to region start
        chunk = mem_file.read(end - start)  # read region contents
        output_file.write(chunk)  # append region contents to dump.raw

maps_file.close()
mem_file.close()
output_file.close()

# Usage:
# python3 memdump.py 275 stack
# python3 memdump.py 275 heap

Finding remnant secrets

To illustrate that secrets can linger in memory if not properly erased, we will use a basic C program:

example.c

#include <stdio.h>

#include <stdlib.h>

#include <unistd.h>

int menu() {

int choice;

printf("Menu:\n");

scanf("%d", &choice);

return choice;

}

void doSmth() {

// do something

}

int main(int argc, char **argv) {

while (1)

{

switch (menu())

{

case 1:

doSmth();

break;

case 2:

printf("Exiting...\n");

return 0;

default:

printf("Invalid choice.\n");

break;

}

}

}

We will adapt the doSmth function for our examples. Remember that the program needs to be running in order to dump its memory. That’s why we use a loop and only exit when requested.

On the stack

In this example, we will simulate a fake login function, which highlights how secrets can be stored on the stack and potentially remain in memory.

void doSmth() {

char username[256];

char password[128];

printf("Input username:\n");

read(0, username, 256);

printf("Input password:\n");

read(0, password, 128);

// do the username and password check then return

}

In this function, the username and password variables are local, which means they are stored on the stack. Stack variables do not need to be explicitly freed at the end of the function.

Let’s compile and run the program, input some information and dump the memory afterwards:

# gcc stack.c && ./a.out

Menu:

1

Input username:

myusername

Input password:

mysupersecretpassword

Menu:

At this point the doSmth function has returned. In a new terminal, we dump the content of the stack and look for our inputs:

# python3 memdump.py `pgrep a.out` stack

# strings dump.raw

GLIBC_PRIVATE

early_init

strnl

mysupersecretpassword

zLox8

R=x86_64

./a.out

HOSTNAME=docker-desktop

...

We successfully recovered our password! But, as you can see, the username is not present. Why?

As previously discussed, the stack’s LIFO structure results in frequent overwriting of data. In this specific case, the next call to menu() and the scanf call it makes reused the stack area that doSmth had occupied. They overwrote part of it, which made the username unrecoverable. This shows how stack reuse can mitigate the risk without eliminating the need to securely erase sensitive information from the stack.

On the heap

In this example, we have a C program that utilizes dynamically allocated memory on the heap to store sensitive information, including a username and password.

typedef struct creds_t {

char username[256];

char password[128];

} creds_t;

void doSmth() {

creds_t *creds = (creds_t *) malloc(sizeof(creds_t));

printf("Input username:\n");

read(0, creds->username, 256);

printf("Input password:\n");

read(0, creds->password, 128);

// do the username and password check

// free the credentials

free(creds);

}

In this function, a creds_t structure is dynamically allocated on the heap to store the username and password. After the data is read, the program performs the necessary checks and then releases the allocated memory with free.

Despite the deallocation of memory with free, the sensitive information may continue to exist in memory.

# python3 memdump.py `pgrep a.out` heap

# strings dump.raw

Menu:

password:

mysupersecretpassword

As with the previous example, we have successfully recovered the password. The username’s absence can be attributed to how GLIBC’s heap implementation represents freed memory chunks. The first 16 bytes of a freed chunk are overwritten by two pointers, which here is enough to erase our short username.
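The two heap behaviors described above can be modeled in a few lines: a free writes metadata over the start of the chunk, and every other byte is left untouched. `ToyHeap` is a hypothetical, heavily simplified allocator, not GLIBC’s, and the pointer values are made up. It also hands back the most recently freed chunk of a given size first, a simplification of GLIBC’s behavior for small chunks.

```python
import struct
from collections import defaultdict


class ToyHeap:
    """Toy allocator (not glibc): free() stamps 16 bytes of metadata at the
    start of a chunk, and malloc() hands back the most recently freed chunk
    of the same size (LIFO) without clearing it."""

    def __init__(self) -> None:
        self.chunks = []                      # every chunk ever allocated
        self.free_lists = defaultdict(list)   # size -> stack of free chunk ids

    def malloc(self, size: int) -> int:
        if self.free_lists[size]:
            return self.free_lists[size].pop()  # reused as-is
        self.chunks.append(bytearray(size))
        return len(self.chunks) - 1

    def free(self, chunk_id: int) -> None:
        chunk = self.chunks[chunk_id]
        # Two hypothetical 8-byte pointers overwrite the first 16 bytes.
        chunk[0:16] = struct.pack("<QQ", 0x5601F9D03AB0, 0x5601F9D03010)
        self.free_lists[len(chunk)].append(chunk_id)


heap = ToyHeap()
creds = heap.malloc(384)                                   # sizeof(creds_t)
heap.chunks[creds][0:11] = b"myusername\n"                 # username at offset 0
heap.chunks[creds][256:278] = b"mysupersecretpassword\n"   # password at offset 256
heap.free(creds)

leftover = heap.chunks[creds]
print(b"myusername" in leftover, b"mysupersecretpassword" in leftover)  # False True
print(heap.malloc(384) == creds)  # True: the same chunk comes back
```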

In the following sections, we will cover methods and techniques for securely erasing sensitive data from memory.

Erasing sensitive information

As we’ve witnessed in previous examples, sensitive information can persist in memory if not disposed of properly.

A common approach to wipe secrets is overwriting them with zeros, a process often referred to as “zeroization.” Zeroization ensures that sensitive data is replaced with a known, non-sensitive value, rendering it unreadable and unrecoverable.

To demonstrate the effectiveness of zeroization, let’s implement this technique in the previous heap-based example.

#include <string.h> // for memset

void clean_free_creds(creds_t *creds) {

memset(creds, 0, sizeof(creds_t));

free(creds);

}

void doSmth() {

creds_t *creds = (creds_t *) malloc(sizeof(creds_t));

printf("Input username:\n");

read(0, creds->username, 256);

printf("Input password:\n");

read(0, creds->password, 128);

// do the username and password check then return

// free the credentials properly

clean_free_creds(creds);

}

This time, we wrote a helper function that overwrites the whole creds_t structure with zeros before freeing it. This should prevent information leakage. Let’s verify.

# python3 memdump.py `pgrep a.out` heap

# strings dump.raw

Menu:

password:

No sensitive information in memory! We have done it, or have we?

Compiler optimization

Previously, we compiled the code with gcc heap_clean.c, which does not enable compiler optimizations (GCC defaults to -O0). Real projects, however, are most likely built with some optimizations enabled.

To enable compiler optimizations, you can use the -O flag followed by an optimization level, such as -O1, -O2, or -O3, with higher numbers indicating higher levels of optimization.

We retry dumping memory after enabling the first level of optimizations.

# gcc heap_clean.c -O1 && ./a.out

# python3 memdump.py `pgrep a.out` heap

# strings dump.raw

Menu:

password:

mysupersecretpassword

Despite our explicit use of memset to overwrite the password, it remains visible in memory. This highlights a compelling reality of software development: the source code alone does not always guarantee the expected behavior. Compiler optimizations, like the one encountered here, can take shortcuts. To understand what happened, we can use gdb to disassemble the clean_free_creds function.

# disass clean_free_creds

Dump of assembler code for function clean_free_creds:

0x0000000000001241 <+0>: endbr64

0x0000000000001245 <+4>: sub rsp,0x8

0x0000000000001249 <+8>: call 0x10a0 <free@plt>

0x000000000000124e <+13>: add rsp,0x8

0x0000000000001252 <+17>: ret

End of assembler dump.

The absence of memset in the disassembled code reveals a compiler optimization known as dead-store elimination. The buffer is passed to free right after being zeroed, and a correct C program can never read freed memory. The writes performed by memset therefore have no observable effect, so the compiler is allowed to remove them. The optimized code can be summarized as follows:

void clean_free_creds(creds_t *creds) {

// The compiler decided the next line is useless.

// memset(creds, 0, sizeof(creds_t));

free(creds);

}

You can compare this with the disassembly of the unoptimized build, where memset is called.

To protect against this kind of optimization, we will use the volatile keyword. In C and C++, volatile tells the compiler that a value can change, or be observed, through means it cannot see. Every access through a volatile-qualified lvalue must therefore actually be performed and cannot be optimized away. This requires rewriting the clean_free_creds function without memset, since memset takes a plain void * and would discard the volatile qualifier.

void clean_free_creds(creds_t *creds) {

size_t size = sizeof(creds_t);

volatile unsigned char *p = (volatile unsigned char *) creds;

while (size--){

*p++ = 0;

}

free(creds);

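}

If available, you might prefer a dedicated function such as explicit_bzero (glibc 2.25 and later) or the C23 memset_explicit function. Both exist precisely so that the zeroing is not optimized away.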

A similar volatile-pointer loop is used by the famous libsodium library in sodium_memzero, as a fallback when no such function is available.

We retry dumping memory after applying this technique and enabling the highest level of optimizations.

# gcc heap_clean.c -O3 && ./a.out

# python3 memdump.py `pgrep a.out` heap

# strings dump.raw

Menu:

password:

# disass clean_free_creds

Dump of assembler code for function clean_free_creds:

0x0000000000001300 <+0>: endbr64

0x0000000000001304 <+4>: lea rcx,[rdi+0x180] # Load the end address of memory to clear into rcx

0x000000000000130b <+11>: mov rax,rdi # Copy the start address of memory to rax

0x000000000000130e <+14>: xchg ax,ax # 2-byte no-op that aligns the loop start

0x0000000000001310 <+16>: mov rdx,rax # Copy the current address to rdx

0x0000000000001313 <+19>: add rax,0x1 # Advance to the next byte

0x0000000000001317 <+23>: mov BYTE PTR [rdx],0x0 # Zeroize the current byte in memory

0x000000000000131a <+26>: cmp rax,rcx # Compare the next address with the end address

0x000000000000131d <+29>: jne 0x1310 <clean_free_creds+16> # If not equal, continue the loop

0x000000000000131f <+31>: jmp 0x10a0 <free@plt>

End of assembler dump.

This assembly code is a loop that iterates through the memory block and sets each byte to 0, so the entire 384-byte (0x180) block is zeroized.

The OpenSSL library uses another approach. Instead of declaring a volatile pointer to the memory area to clear, it uses a volatile pointer to the memset function itself.

typedef void *(*memset_t)(void *, int, size_t);

static volatile memset_t memset_func = memset;

void clean_free_creds(creds_t *creds) {

memset_func(creds, 0, sizeof(creds_t));

free(creds);

}

The resulting disassembly is shown below for comparison.

# disass clean_free_creds

Dump of assembler code for function clean_free_creds:

0x0000000000001300 <+0>: endbr64

0x0000000000001304 <+4>: push rbp

0x0000000000001305 <+5>: mov rbp,rdi

0x0000000000001308 <+8>: mov rax,QWORD PTR [rip+0x2d01] # 0x4010 <memset_func>

0x000000000000130f <+15>: mov edx,0x180 # Set the length of the memory area to clear (384 bytes)

0x0000000000001314 <+20>: xor esi,esi # Overwrite with 0

0x0000000000001316 <+22>: call rax # Indirect call to memset_func (effectively calls memset)

0x0000000000001318 <+24>: mov rdi,rbp

0x000000000000131b <+27>: pop rbp

0x000000000000131c <+28>: jmp 0x10a0 <free@plt>

End of assembler dump.

The key part of this code is the indirect call through memset_func. Because the pointer is volatile, the compiler must reload it from memory before the call and cannot assume it still points to memset. It therefore cannot prove that the call is a removable memset, and has to keep it.

We’ve explored several ways of securely clearing memory to ensure sensitive data is effectively erased. Now, let’s move on to understanding the consequences of not clearing data correctly.

The possible consequences

Previously, we explored the risk of secret exposure through direct memory dumps. We witnessed how sensitive information, like passwords and cryptographic keys, can be left exposed in memory, making them vulnerable to unauthorized access and potential exploitation by attackers. It’s important to note that performing memory dumps typically requires privileged access to the machine and is not a feasible attack vector for all potential adversaries.

We will explore other scenarios where sensitive data exposure may not necessitate privileged access to a system. These scenarios include instances where attackers can exploit vulnerabilities in an application’s code.

Secrets in unexpected locations

Inadequate memory clearance may leave secrets, such as passwords or cryptographic keys, in memory that is later handed out to a new allocation. From there, they can resurface in unexpected places.

To illustrate this concept, let’s consider a C code example where a user can log in, compose messages, and preview them.

typedef struct creds_t {

char username[256];

char password[128];

} creds_t;

typedef struct msg_t {

char message[256];

char signature[128];

} msg_t;

void doSmth() {

creds_t *creds = (creds_t *) malloc(sizeof(creds_t));

printf("Input username:\n");

read(0, creds->username, 256);

printf("Input password:\n");

read(0, creds->password, 128);

// do the username and password check

// free the credentials

free(creds);

// we allocate a msg_t struct

msg_t *msg = (msg_t *) malloc(sizeof(msg_t));

printf("Input message:\n");

read(0, msg->message, 256);

// suppose the signature is only appended optionally

// print the message and the signature

printf("message : %s\n", msg->message);

printf("signature: %s\n", msg->signature);

free(msg);

}

In this example, all the components are contained within a single function. In a real-world application, however, they might be scattered across different parts of the code and triggered by various user actions. This makes such a vulnerability hard to detect and to trigger.

The function begins by allocating memory for the creds_t structure using malloc, then stores the provided credentials in the allocated memory. Afterward, the function frees the memory using free. As shown previously, the password is still present in memory.

The function then allocates memory for the msg_t structure using malloc. An important point to note is the inner workings of GLIBC’s heap implementation: the last freed memory region of a matching size is given priority by malloc. In this case, because both structures have the same size, the same memory region previously used for the creds_t structure is repurposed to represent a msg_t structure.

Crucially, the signature member of msg_t is located at the same offset (256) as the password member of creds_t. Since the example assumes that message signing is optional, only the message member is overwritten. The signature field therefore still contains the password bytes.

Consequently, the password can be displayed directly in the message preview, a security risk that arises from incomplete memory clearance.

message : my test message

signature: mysupersecretpassword

This situation is partly due to the fact that the signature member is never initialized.
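The layout argument can be checked with Python’s ctypes, whose Structure fields follow the platform’s native alignment rules. This sketch mirrors creds_t and msg_t. It first views one 384-byte region as a creds_t, then reuses it as a msg_t. Finally, it shows that zeroing the region before use, as calloc or memset would, removes the leak.

```python
import ctypes


class creds_t(ctypes.Structure):
    _fields_ = [("username", ctypes.c_char * 256), ("password", ctypes.c_char * 128)]


class msg_t(ctypes.Structure):
    _fields_ = [("message", ctypes.c_char * 256), ("signature", ctypes.c_char * 128)]


print(ctypes.sizeof(creds_t), ctypes.sizeof(msg_t))      # 384 384
print(creds_t.password.offset, msg_t.signature.offset)   # 256 256

region = bytearray(384)                     # stands in for the reused heap chunk
creds = creds_t.from_buffer(region)
creds.password = b"mysupersecretpassword"   # written, then "freed" without wiping

msg = msg_t.from_buffer(region)             # the same bytes, viewed as msg_t
msg.message = b"my test message"            # only the message is written
leaked = msg.signature                      # char fields read up to the first NUL
print(leaked)                               # b'mysupersecretpassword'

# Zeroing the chunk before use (what calloc or memset would do) removes the leak.
ctypes.memset(ctypes.addressof(msg), 0, ctypes.sizeof(msg_t))
msg.message = b"my test message"
print(msg.signature)                        # b''
```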

Another way for the password to leak in this scenario stems from the absence of a null byte at the end of the message, since read does not append one. When the message reaches its maximum length of 256 bytes, nothing separates it from the leftover password that follows. printf therefore keeps reading and prints the password as part of the message.

Input message:

AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA

message : AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAmysupersecretpasswordIn summary, we’ve illustrated that in certain cases, a traditional memory dump is not required to inadvertently expose sensitive information. Incomplete memory clearance and unforeseen data interactions, such as in our example, can lead to data leakage.
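The missing terminator can be modeled the same way. Called without a size, `ctypes.string_at` reads until the first null byte, just like printf with %s. A message that fills all 256 bytes therefore runs straight into the leftover password. This is a sketch reusing the msg_t layout from the C example.

```python
import ctypes


class msg_t(ctypes.Structure):
    _fields_ = [("message", ctypes.c_char * 256), ("signature", ctypes.c_char * 128)]


region = bytearray(384)
region[256:256 + 21] = b"mysupersecretpassword"  # leftover bytes from creds_t

msg = msg_t.from_buffer(region)
msg.message = b"A" * 256        # fills the field: no room left for a null byte

# printf("%s", msg->message) keeps reading until it meets a null byte.
printed = ctypes.string_at(ctypes.addressof(msg))
print(printed[250:])            # b'AAAAAAmysupersecretpassword'
```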

Remote Code Execution

In a manner similar to the previous example, we will present a scenario resulting in Remote Code Execution (RCE). While this scenario might seem far-fetched at first glance, such situations are still within the realm of possibility and can pose severe risks when they do occur.

typedef struct creds_t {

char username[256];

char password[128];

} creds_t;

typedef struct console_t {

char buffer[370];

// dynamic pointer to a display function

// suppose we have different functions with fancy features

// colors, logging, custom formatting, ...

int (*display_func)(const char *); // same signature as puts

} console_t;

void display(console_t *console) {

if (console->display_func == NULL) {

console->display_func = puts;

}

console->display_func(console->buffer);

}

void doSmth() {

creds_t *creds = (creds_t *) malloc(sizeof(creds_t));

printf("Input username:\n");

read(0, creds->username, 256);

printf("Input password:\n");

read(0, creds->password, 128);

// do the username and password check

// free the credentials

free(creds);

console_t *console = (console_t *) malloc(sizeof(console_t));

printf("Input message:\n");

read(0, console->buffer, 256);

display(console);

free(console);

}

As in the previous example, the complexity of a real-world application can make such a vulnerability hard to detect and to trigger.

The sequence of events is similar to the previous scenario.

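The display_func pointer of console_t overlaps the password member of creds_t. On x86-64, the 370-byte buffer is padded to an 8-byte boundary, so display_func sits at offset 376, inside password (offsets 256 to 383). Only 256 bytes are read into buffer, and display falls back to puts only when the pointer is NULL. If the password is long enough (more than 120 characters), part of it is therefore interpreted as the function pointer value. This will likely cause a segmentation fault but, more critically, can create an avenue for unauthorized code execution.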

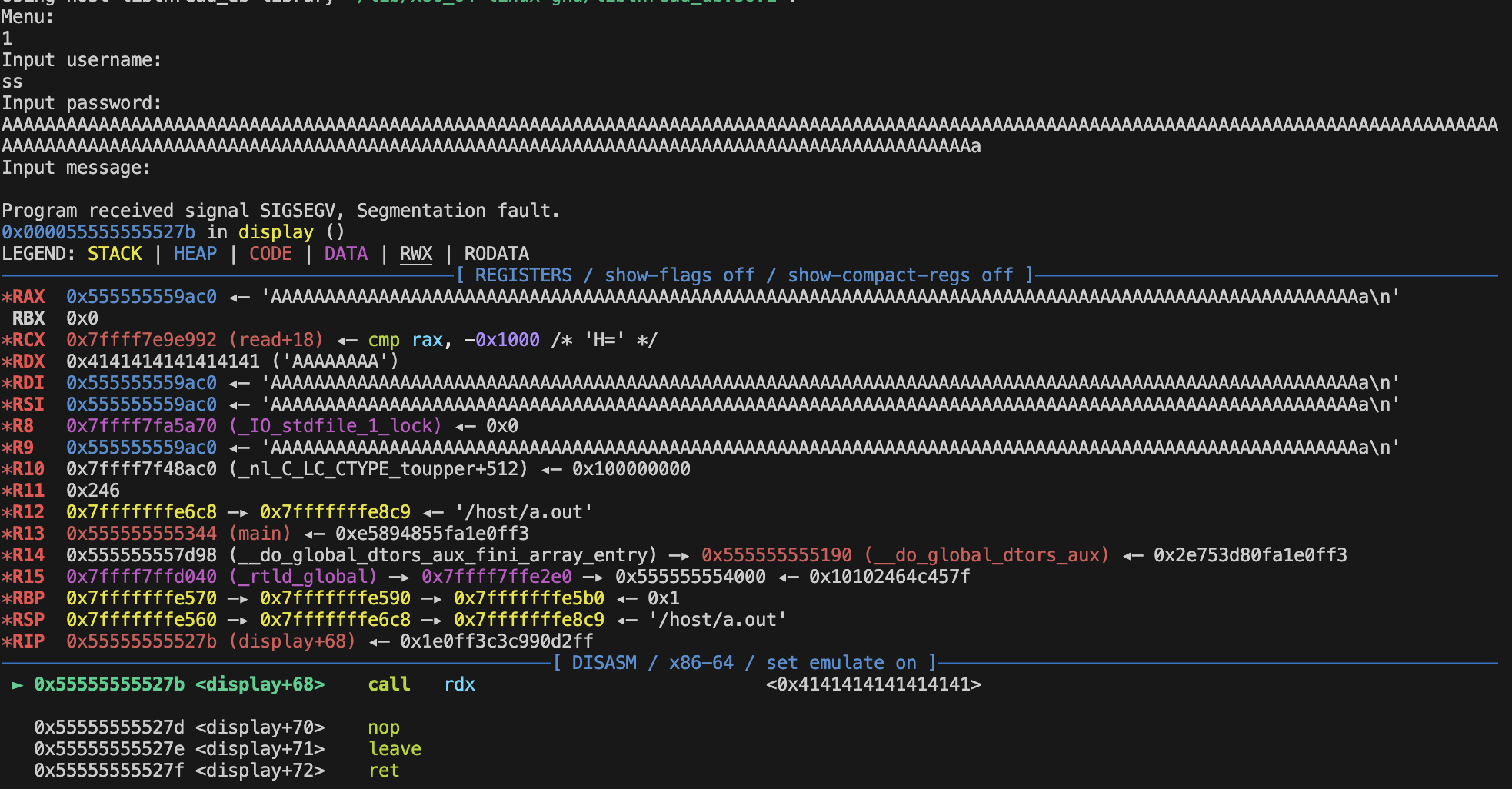

This can be confirmed in gdb :

Here we see that the RDX register holds the value of display_func, which in this case is 0x4141414141414141 and not the address of puts. This value is the little-endian integer representation of “AAAAAAAA”, taken from the user-supplied password. It can allow an attacker to manipulate the program’s execution flow, potentially leading to remote code execution.
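The offsets involved can be double-checked with ctypes. This assumes a 64-bit platform where pointers are 8 bytes and 8-byte aligned. The sketch overlays a console_t on a region that previously held a creds_t whose password was 128 'A' characters. Here `c_void_p` stands in for the function pointer.

```python
import ctypes


class creds_t(ctypes.Structure):
    _fields_ = [("username", ctypes.c_char * 256), ("password", ctypes.c_char * 128)]


class console_t(ctypes.Structure):
    # c_void_p stands in for the function pointer (same size and alignment)
    _fields_ = [("buffer", ctypes.c_char * 370), ("display_func", ctypes.c_void_p)]


off = console_t.display_func.offset
print(off, ctypes.sizeof(console_t))         # 376 384
print(off - creds_t.password.offset)         # 120: password bytes 120..127

region = bytearray(384)                      # one chunk, reused by both structs
creds_t.from_buffer(region).password = b"A" * 128

console = console_t.from_buffer(region)
console.buffer = b"hello"                    # only the start of buffer is written
print(hex(console.display_func))             # 0x4141414141414141
print(int.from_bytes(b"AAAAAAAA", "little") == console.display_func)  # True
```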

Higher-level languages

In higher-level programming languages, sensitive data wiping becomes a more complex task due to the abstractions and automated memory management they offer. Unlike low-level languages like C, where programmers have granular control over memory allocation and deallocation, higher-level languages abstract many of these low-level details. In languages like Python, Java, or C#, memory is typically managed by a runtime environment or virtual machine. Variables are automatically allocated and deallocated. While this abstraction can simplify software development, it also introduces challenges when it comes to securely erasing sensitive data.

Our focus will primarily be on Python and Java. We’ll cover examples from both languages, examining how they handle memory and whether sensitive information can be effectively cleared or if it leaves traces that can be exploited.

We start by adapting our memory dump script to focus on a different task: searching for a specific value, often referred to as the “needle”, within all the mapped memory regions. This adaptation is necessary due to the intricate nature of memory maps in more complex applications. The data we seek may be distributed across multiple memory regions and locations within the process’s memory space.

memsearch.py

#! /usr/bin/env python3

import re
import sys

PID = sys.argv[1]
needle = sys.argv[2]

# a needle starting with 0x is interpreted as hex bytes, anything else as text
if needle.startswith("0x"):
    needle = bytes.fromhex(needle[2:])
else:
    needle = needle.encode()

maps_file = open(f"/proc/{PID}/maps", 'r')
mem_file = open(f"/proc/{PID}/mem", 'rb', 0)

for line in maps_file.readlines():  # for each mapped region
    m = re.match(r'([0-9A-Fa-f]+)-([0-9A-Fa-f]+) ([-r])', line)
    if m is not None and m.group(3) == 'r':  # if this is a readable region
        start = int(m.group(1), 16)
        end = int(m.group(2), 16)
        try:
            mem_file.seek(start)  # seek to region start
            chunk = mem_file.read(end - start)  # read region contents
            if needle in chunk:
                print(f"Found needle in {line.strip()}")
        except (OSError, OverflowError):
            continue  # some special regions cannot be read: skip them, don't stop

maps_file.close()
mem_file.close()

Python

Here’s a simple program that uses the PyCryptodome library to encrypt user data. This program will serve as an example of how sensitive information is managed in Python.

example_pycryptodome.py

from Crypto.Cipher import ChaCha20_Poly1305
from Crypto.Protocol.KDF import scrypt
from Crypto.Random import get_random_bytes

def test():
    password = input("password: ")
    # mysupersecurepassword
    # salt = get_random_bytes(24)
    # we hardcode it so the key is constant for the tests
    salt = b'K\n\xac\xdb\xa754V\x87\xe0+\xfc\x13}W\xfbM\xfcA\xfc\xda\xfa\xffA'
    key = scrypt(password, salt, 32, N=2**14, r=8, p=1)
    # fcb62d22fba4ddf97224e954de703a448cfeb6b8689dd7eb622154fb4b144ebc
    message = input("message: ").encode()
    cipher = ChaCha20_Poly1305.new(key=key)
    ciphertext, tag = cipher.encrypt_and_digest(message)
    print(f"Your encrypted message:\n{ciphertext=}\n{tag=}\n{salt=}")

test()

# wait for dump
input()

This program takes a user-provided password, derives a key from it using scrypt with a salt, and encrypts a user-provided message with the ChaCha20-Poly1305 algorithm. The salt is hardcoded for testing consistency. After encryption, it displays the ciphertext, tag and salt for demonstration purposes.

After running the program, our only hope is that Python’s garbage collection and memory management mechanisms remove sensitive data from memory once it goes out of scope. However, there’s no guarantee that this process will securely erase the data. In the upcoming tests, we will examine whether Python’s handling of local variables results in the secure erasure of sensitive information or if remnants of data are left in memory.

# python3 memsearch.py `pgrep python3` mysupersecurepassword

Found needle in 7fe4af963000-7fe4afbc5000 rw-p 00000000 00:00 0

# python3 memsearch.py `pgrep python3` 0xfcb62d22fba4ddf97224e954de703a448cfeb6b8689dd7eb622154fb4b144ebc

Found needle in 55db116d2000-55db1197d000 rw-p 00000000 00:00 0 [heap]

Found needle in 7fe4af4a8000-7fe4af7f1000 rw-p 00000000 00:00 0

These findings indicate that sensitive data, such as the password and the derived key, are still present in memory after the function has returned. This raises concerns about the handling of sensitive information in Python, as the data is clearly not cleared from memory.
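memsearch.py only reports which region contains the needle. To locate each occurrence, the scan can compute absolute addresses from the region start. `find_all` and `parse_needle` are hypothetical helpers, exercised here on in-memory sample data rather than on a live process.

```python
def parse_needle(text: str) -> bytes:
    """Same convention as memsearch.py: '0x...' is hex, anything else is text."""
    return bytes.fromhex(text[2:]) if text.startswith("0x") else text.encode()


def find_all(chunk: bytes, needle: bytes, base: int = 0):
    """Yield the absolute address of every occurrence of needle in chunk."""
    pos = chunk.find(needle)
    while pos != -1:
        yield base + pos
        pos = chunk.find(needle, pos + 1)


# Sample data standing in for one region read from /proc/<pid>/mem.
region_start = 0x55DB116D2000  # start address as printed in the maps line
needle = parse_needle("0xfcb62d22")
chunk = bytes(16) + needle + bytes(8) + needle
print([hex(addr) for addr in find_all(chunk, needle, region_start)])
# ['0x55db116d2010', '0x55db116d201c']
```

Inside memsearch.py, the `needle in chunk` test could be replaced by a loop over `find_all(chunk, needle, start)` that prints each address.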

Adding del statements to remove variables explicitly is a common recommendation to help clear memory in Python. del removes the name binding and decrements the object’s reference count. Once that count reaches zero, CPython frees the object. Let’s add it for the variables used in the test function to see whether it has an impact on memory clearance:

del key

del password

del cipher

After making these changes, we run the program again and check whether sensitive data is still present in memory.

# python3 memsearch.py `pgrep python3` mysupersecurepassword

Found needle in 7f5fc6b87000-7f5fc6de9000 rw-p 00000000 00:00 0

# python3 memsearch.py `pgrep python3` 0xfcb62d22fba4ddf97224e954de703a448cfeb6b8689dd7eb622154fb4b144ebc

Found needle in 55b95e064000-55b95e311000 rw-p 00000000 00:00 0 [heap]

Found needle in 7f5fc66cc000-7f5fc6a15000 rw-p 00000000 00:00 0

Nothing changed. We can try forcing garbage collection with the gc module. Calling gc.collect() explicitly asks Python’s garbage collector to perform a full collection. This may help release memory occupied by objects that are no longer needed.

import gc

del key

del password

del cipher

gc.collect()

Let’s check again whether sensitive data is still present in memory.

# python3 memsearch.py `pgrep python3` mysupersecurepassword

Found needle in 7f6f52ef2000-7f6f52ff4000 rw-p 00000000 00:00 0

# python3 memsearch.py `pgrep python3` 0xfcb62d22fba4ddf97224e954de703a448cfeb6b8689dd7eb622154fb4b144ebc

Found needle in 55df212f1000-55df2159f000 rw-p 00000000 00:00 0 [heap]

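Found needle in 7f6f52b5e000-7f6f52ea7000 rw-p 00000000 00:00 0

That did not help either, which is not surprising: gc.collect() only reclaims objects caught in reference cycles, and reclaiming an object does not overwrite its contents. Maybe we can achieve better results if we don’t use variables at all.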

ciphertext, tag = ChaCha20_Poly1305.new(key=scrypt(input("password: "), salt, 32, N=2**14, r=8, p=1)).encrypt_and_digest(message)

Let’s check again.

# python3 memsearch.py `pgrep python3` mysupersecurepassword

Found needle in 5606dd854000-5606ddafe000 rw-p 00000000 00:00 0 [heap]

# python3 memsearch.py `pgrep python3` 0xfcb62d22fba4ddf97224e954de703a448cfeb6b8689dd7eb622154fb4b144ebc

Found needle in 5606dd854000-5606ddafe000 rw-p 00000000 00:00 0 [heap]

We reduced the occurrences of the key to a single one, but our sensitive data is still somewhere in memory.
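CPython’s two reclamation paths illustrate why neither del nor gc.collect() made a difference. In this sketch, `Secret` is a hypothetical class, and `weakref.finalize` records the moment each object is actually reclaimed. An object without cycles is reclaimed as soon as del drops its last reference. gc.collect() only matters for reference cycles. In neither case does reclamation overwrite the object’s bytes in a regular CPython build.

```python
import gc
import weakref


class Secret:
    """Hypothetical container for a secret value."""

    def __init__(self, value: bytes) -> None:
        self.value = value


reclaimed = []
gc.disable()  # keep automatic collections out of the experiment

plain = Secret(b"key-1")
weakref.finalize(plain, reclaimed.append, "plain")
del plain                        # last reference gone: reclaimed immediately
print(reclaimed)                 # ['plain']

cyclic = Secret(b"key-2")
cyclic.self_ref = cyclic         # reference cycle: refcount never drops to zero
weakref.finalize(cyclic, reclaimed.append, "cyclic")
del cyclic
print(reclaimed)                 # ['plain']

gc.collect()                     # the cycle collector finds and reclaims it
print(reclaimed)                 # ['plain', 'cyclic']
gc.enable()
```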

At this point, I began to wonder whether the observed behavior was due to the way the PyCryptodome library handles sensitive information. That would have been somewhat surprising, considering the library’s popularity and reputation for security. To investigate further, I rewrote the code using the well-established PyNaCl library. PyNaCl provides Python bindings for the libsodium cryptographic library, which I know clears sensitive information from memory.

example_pynacl.py

from nacl.secret import SecretBox
from nacl.pwhash import scrypt
from nacl.utils import random

def test():
    password = input("password: ").encode()
    # mysupersecurepassword
    # salt = random(32)
    # we hardcode it so the key is constant for the tests
    salt = b'1\xfe5\x80\xc0\xc3\x01\nv\x84@8\x89|\x05TY.\x13\x81\x1b\xa5O\xc8,lH\xf1O-\xb7 '
    key = scrypt.kdf(32, password, salt)
    # 8440581e8c3818c9281f4e7a2c40348a69f9a648db5eb0a1ec475a38b536c389
    message = input("message: ").encode()
    cipher = SecretBox(key=key)
    encrypted = cipher.encrypt(message, nonce=random(24))
    ciphertext = encrypted.ciphertext
    nonce = encrypted.nonce
    print(f"Your encrypted message:\n{ciphertext=}\n{nonce=}")

test()

# wait for dump
input()

We first run the test without the del statements and without explicit garbage collection.

```console
# python3 memsearch.py `pgrep python3` mysupersecurepassword
Found needle in 5599a3740000-5599a3871000 rw-p 00000000 00:00 0 [heap]
Found needle in 7faf64d2e000-7faf650b5000 rw-p 00000000 00:00 0
# python3 memsearch.py `pgrep python3` 0x8440581e8c3818c9281f4e7a2c40348a69f9a648db5eb0a1ec475a38b536c389
Found needle in 5599a3740000-5599a3871000 rw-p 00000000 00:00 0 [heap]
Found needle in 7faf64d2e000-7faf650b5000 rw-p 00000000 00:00 0
```

The results are identical. Now we add the del keyword and ask for explicit garbage collection.

```python
import gc  # at the top of the file

# at the end of test(), where these names are in scope
del key
del password
del cipher
gc.collect()
```

Let’s check again.

```console
# python3 memsearch.py `pgrep python3` mysupersecurepassword
Found needle in 7fc18a980000-7fc18ad07000 rw-p 00000000 00:00 0
# python3 memsearch.py `pgrep python3` 0x8440581e8c3818c9281f4e7a2c40348a69f9a648db5eb0a1ec475a38b536c389
Found needle in 55ddc2d9d000-55ddc2ecb000 rw-p 00000000 00:00 0 [heap]
Found needle in 7fc18a980000-7fc18ad07000 rw-p 00000000 00:00 0
```

That did not help much. Now without variables.

```python
encrypted = SecretBox(key=scrypt.kdf(32, input("password: ").encode(), salt)).encrypt(message, nonce=random(24))
```

Let’s check once again.

```console
# python3 memsearch.py `pgrep python3` mysupersecurepassword
Found needle in 55b6be26d000-55b6be399000 rw-p 00000000 00:00 0 [heap]
# python3 memsearch.py `pgrep python3` 0x8440581e8c3818c9281f4e7a2c40348a69f9a648db5eb0a1ec475a38b536c389
Found needle in 55b6be26d000-55b6be399000 rw-p 00000000 00:00 0 [heap]
```

We get the exact same behaviour as with PyCryptodome, so the issue might stem from how Python itself handles memory.
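A minimal illustration of two CPython properties that plausibly explain these results (pure Python, no cryptography library involved; the alias name is hypothetical and stands in for any reference a library might keep). First, del only removes a name: the object survives as long as another reference exists, and when the last one disappears, release builds of CPython hand the memory back to the allocator without overwriting it. Second, str and bytes are immutable, so every conversion (input() to str, .encode() to bytes) leaves another copy that cannot be overwritten in place.

```python
password = "mysupersecurepassword"  # a str, like the value returned by input()
encoded = password.encode()         # .encode() creates a second, separate bytes object
alias = encoded                     # hypothetical: a reference held elsewhere, e.g. by a library

# del only removes the name "encoded"; the object lives on while other references exist
del encoded
print(alias)  # b'mysupersecurepassword'

# bytes (like str) is immutable, so the secret cannot be overwritten in place
try:
    alias[0] = 0
except TypeError as exc:
    print(f"cannot overwrite: {exc}")

# a bytearray is mutable and can be zeroed, but it is yet another copy:
# clearing it leaves the str and bytes objects above untouched
buf = bytearray(alias)
for i in range(len(buf)):
    buf[i] = 0
print(bytes(buf) == bytes(len(buf)))  # True
print(password, alias)
```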

Concluding our investigation, it’s evident that there is little we can do to effectively clear sensitive data from memory in Python. The question remains: is this limitation a cause for concern? The answer depends on your particular threat model and security requirements.

While it’s true that Python’s memory management may leave traces of sensitive information behind, the significance of this issue varies. For applications dealing with highly sensitive data or operating in high-security environments, this could be a point of concern. In contrast, for many everyday use cases and applications, the risk may be deemed acceptable.

Ultimately, understanding your specific security needs, the nature of your application, and the level of risk you’re willing to tolerate is crucial in determining whether this limitation in Python’s memory management is a critical concern or a manageable one.
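If some mitigation is still wanted, a best-effort pattern is to keep secrets in a mutable bytearray and overwrite it as soon as it is no longer needed. The sketch below is one way to do this under stated assumptions: the wipe and derive_key helpers are hypothetical names, hashlib.pbkdf2_hmac stands in for the scrypt KDFs used above, and the password is hardcoded as a bytearray because input() can only return an immutable str. It only clears the buffers it is given; the bytes object returned by the KDF and any copies made inside a library are left behind, consistent with the results above.

```python
import ctypes
import hashlib

def wipe(buf: bytearray) -> None:
    """Best effort: overwrite a mutable buffer with zeros, in place."""
    if not buf:
        return
    # Map a ctypes char array onto the bytearray's own storage (no copy is made)
    view = (ctypes.c_char * len(buf)).from_buffer(buf)
    ctypes.memset(ctypes.addressof(view), 0, len(buf))
    del view  # release the buffer export so the bytearray can be resized again

def derive_key(password: bytearray, salt: bytes) -> bytearray:
    # hashlib returns an immutable bytes object; copying it into a bytearray
    # lets us wipe our copy later, but the bytes object itself is left behind
    return bytearray(hashlib.pbkdf2_hmac("sha256", password, salt, 100_000, dklen=32))

password = bytearray(b"mysupersecurepassword")  # hardcoded: input() would return a str
salt = bytes(32)  # fixed all-zero salt, for illustration only
key = derive_key(password, salt)
try:
    pass  # ... use the key here, e.g. pass it to a cipher ...
finally:
    # clear our copies as soon as they are no longer needed
    wipe(key)
    wipe(password)

print(key == bytearray(32), password == bytearray(len(password)))  # True True
```

Even with this pattern, a cipher object that receives the key may require bytes or keep its own internal copy, which wipe() cannot reach, so it reduces rather than removes the residue.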

Java

In this section, we’ll perform a similar experiment to our Python exploration, but with Java, to assess how well it manages sensitive data in memory.

Let’s begin with a simple Java program designed to encrypt user data using the standard javax.crypto API.

PasswordBasedEncryption.java

```java
import javax.crypto.*;
import javax.crypto.spec.*;
import java.security.*;
import java.security.spec.*;
import java.util.Base64;
import java.util.Scanner;

public class PasswordBasedEncryption {

    public static void test(Scanner scanner) throws Exception {
        System.out.print("Enter your password : ");
        // mysupersecurepassword
        String password = scanner.nextLine();

        // hardcode the salt so the key is constant
        byte[] salt = new byte[] { 0x01, 0x02, 0x03, 0x04, 0x05, 0x06, 0x07, 0x08, 0x09, 0x0A, 0x0B, 0x0C, 0x0D, 0x0E, 0x0F, 0x10 };

        SecretKeyFactory factory = SecretKeyFactory.getInstance("PBKDF2WithHmacSHA256");
        KeySpec spec = new PBEKeySpec(password.toCharArray(), salt, 10000, 256);
        SecretKey secretKey = new SecretKeySpec(factory.generateSecret(spec).getEncoded(), "AES");
        // 25ba24bb7e7243e7750a354b007fb35f507b08ac263b0fb16b019236e7b6e7f0

        Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
        // no IV is supplied, so the provider generates a random one; keep it for decryption
        cipher.init(Cipher.ENCRYPT_MODE, secretKey);
        byte[] iv = cipher.getIV();

        System.out.print("Enter the message to encrypt : ");
        String message = scanner.nextLine();
        byte[] encryptedText = cipher.doFinal(message.getBytes("UTF-8"));

        System.out.println("Encrypted message : " + Base64.getEncoder().encodeToString(encryptedText));
        System.out.println("Salt : " + Base64.getEncoder().encodeToString(salt));
        System.out.println("IV : " + Base64.getEncoder().encodeToString(iv));
    }

    public static void main(String[] args) throws Exception {
        Scanner scanner = new Scanner(System.in);
        test(scanner);
        // wait for dump
        scanner.nextLine();
        scanner.close();
    }
}
```

This program takes user input for a password and a message to encrypt. It derives a 256-bit key from the password and a hardcoded salt (kept constant for testing) using PBKDF2 with HMAC-SHA256 and 10,000 iterations. It then encrypts the message with AES-GCM and prints the ciphertext, the salt, and the randomly generated IV needed for decryption.

The code is similar to our Python example but written in Java.
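The key needle searched for below can be recomputed outside the Java process. This sketch mirrors the Java parameters (salt 0x01 to 0x10, 10,000 iterations, 256-bit key) with Python’s hashlib, assuming that SunJCE’s PBKDF2WithHmacSHA256 encodes the char[] password as UTF-8, which for this ASCII password is the same as its raw bytes. If that assumption holds, the printed value should match the key noted in the Java comment.

```python
import hashlib

# Assumption: SunJCE encodes the char[] password as UTF-8 (identical to ASCII here)
password = "mysupersecurepassword".encode("utf-8")
salt = bytes(range(0x01, 0x11))  # same as the Java salt: 0x01, 0x02, ..., 0x10
# PBKDF2 with HMAC-SHA256, 10000 iterations, 256-bit (32-byte) key, as in PBEKeySpec
key = hashlib.pbkdf2_hmac("sha256", password, salt, 10000, dklen=32)

# memsearch.py receives the key as a 0x-prefixed hex needle
print("0x" + key.hex())
```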

Let’s proceed with the memory analysis for our Java program.

```console
# python3 memsearch.py `pgrep java` mysupersecurepassword
Found needle in c2800000-c6600000 rw-p 00000000 00:00 0
# python3 memsearch.py `pgrep java` 0x25ba24bb7e7243e7750a354b007fb35f507b08ac263b0fb16b019236e7b6e7f0
Found needle in c2800000-c6600000 rw-p 00000000 00:00 0
```

While it might not be entirely surprising, our experiment revealed that both the encryption key and password were present in memory.

Given our previous experience with Python, where explicitly clearing memory didn’t seem to help, it would be interesting to see if invoking the Java garbage collector would yield different results.

```java
// drop the references to the key and password (this does not overwrite them)
secretKey = null;
password = null;

// ask the JVM to run the garbage collector (only a hint)
System.gc();
```

Let’s see if it makes a difference.

```console
# python3 memsearch.py `pgrep java` mysupersecurepassword
# python3 memsearch.py `pgrep java` 0x25ba24bb7e7243e7750a354b007fb35f507b08ac263b0fb16b019236e7b6e7f0
Found needle in c4d00000-c4e00000 rw-p 00000000 00:00 0
```

Unlike in Python, explicitly calling the garbage collector in Java had a positive impact on the removal of the password from memory. However, it is still insufficient to ensure the proper deletion of all sensitive material, as the encryption key could still be recovered.

Ultimately, we arrive at the same conclusion as for Python: the challenge of securely erasing sensitive data from memory in high-level languages like Python and Java persists. Whether or not this risk is tolerable depends on your specific security needs. Fortunately, OWASP’s MASTG project offers potential solutions. It provides an implementation of a SecureSecretKey class, a safer alternative to the default SecretKey class that is designed to clear key material from memory. It also offers recommendations for handling user-provided data securely, such as implementing custom input methods. By adopting these practices, developers can reduce the risk of sensitive data remnants lingering in memory.

Conclusion

Our exploration into the persistence of sensitive information in memory has revealed crucial insights. Incomplete memory clearance can result in wide-ranging consequences, including data leakage and potential remote code execution. We’ve explored methods to securely zero out sensitive data in memory and to prevent compiler optimizations from removing that zeroing. However, it’s essential to acknowledge that some higher-level programming languages have inherent limitations in memory management that make this difficult or impossible. Consequently, when developing applications, it’s paramount to choose languages judiciously based on your unique threat model and security requirements.

At Stackered, we understand the importance of secure software development. Our team, specialized in code reviews and security assessments, can help you identify and fix vulnerabilities in your code. Contact us to ensure your applications are resilient against threats and your sensitive data remains protected.